Blog Series:

- Creating Azure Data Lake

- PowerShell and Options to upload data to Azure Data Lake Store

- Using Azure Data Lake Store .NET SDK to Upload Files

- Creating Azure Data Analytics

- Azure Data Lake Analytics: Database and Tables

- Azure Data Lake Analytics: Populating & Querying Tables

- Azure Data Lake Analytics: How To Extract JSON Files

- Azure Data Lake Analytics: U-SQL C# Programmability

- Azure Data Lake Analytics: Job Execution Time and Cost

In this post, I run an intensive U-SQL job against a large number of files and records, then walk through its performance diagnostics and estimated cost.

I ran a U-SQL job against 4,272 JSON files with about 95,000 rows. With parallelism set to 5 AUs, it took 1.2 hours to complete.

An AU (analytics unit) is a unit of compute roughly equivalent to 2 CPU cores and 6 GB of RAM as of Oct 2016. For details and background, read https://blogs.msdn.microsoft.com/azuredatalake/2016/10/12/understanding-adl-analytics-unit/

To see diagnostics, first click Load Job Profile, then click the Diagnostics tab, and then click Resource usage.

In the AU Usage tab, all 5 AUs were fully utilized throughout the 1 hr 7 minutes of execution.

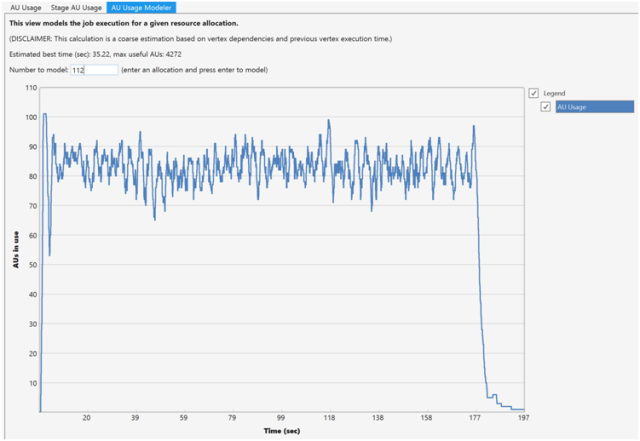

The AU Usage Modeler provides a rough estimate of the number of AUs needed for the best execution time. Here it estimates 4,272 AUs for 35.22 seconds of execution time.

Interestingly, 4,272 is also the number of JSON files being analyzed. So I assume that, to get the best time, the modeler would allocate one AU per file. There is probably more to it than that; I am just commenting on the observation.

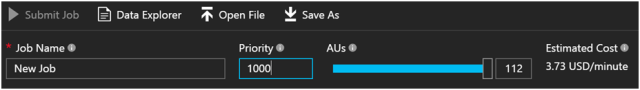

In the job submission settings, I observed that the maximum number of AUs that can be allocated is 112.

Adjusting the Number to model to 112, we see an estimated 197 seconds of execution time.

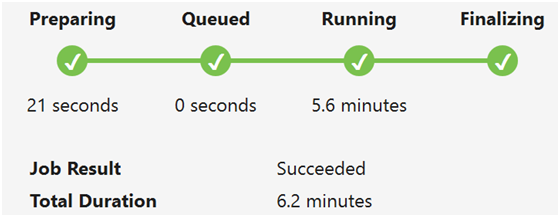
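As a rough sanity check on the modeler's numbers, they can be compared with a naive model in which the work divides perfectly across AUs. This is only a sketch under that assumption, not how the AU Usage Modeler actually computes its estimates. The function name is made up for illustration, and the baseline is the 5 AU run at 1 hr 7 minutes.

```python
# Naive ideal-scaling model: assumes the total work (in AU-seconds) is fixed
# and splits evenly across AUs. This is an assumption for illustration only,
# not the AU Usage Modeler's actual method.

BASELINE_AUS = 5
BASELINE_SECONDS = 67 * 60  # 1 hr 7 min observed at 5 AUs


def ideal_seconds(aus: int) -> float:
    """Execution time if the baseline AU-seconds split evenly over `aus`."""
    total_au_seconds = BASELINE_AUS * BASELINE_SECONDS
    return total_au_seconds / aus


# Modeler estimates quoted in this post, in seconds.
modeler_estimates = {112: 197.0, 4272: 35.22}

for aus, modeled in modeler_estimates.items():
    print(f"{aus} AUs: ideal {ideal_seconds(aus):.1f} s, modeler {modeled} s")
```

Under this assumption, perfect scaling would give about 179.5 seconds at 112 AUs and about 4.7 seconds at 4,272 AUs. The modeler's estimates are higher in both cases, presumably because not all of the work divides evenly.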

Submitting the job again with parallelism set to 112 AUs gave the following outcome:

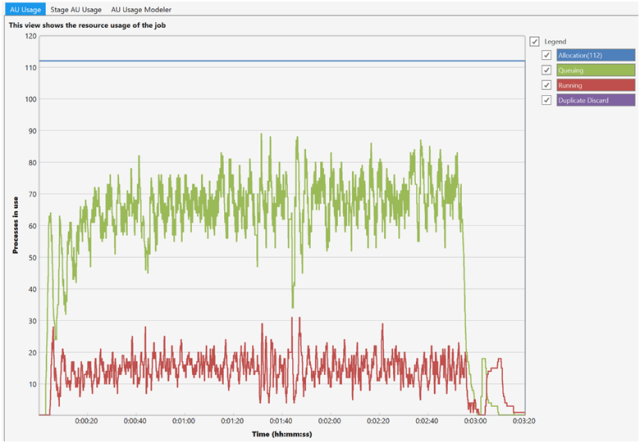

AU Usage diagnostics

So for 112 AUs, the actual run took 6.2 minutes compared to the estimated 197 seconds (about 3.3 minutes), which is roughly 1.9 times longer than estimated.

In the AU Usage graph, you can see roughly 65-70% usage at 112 AUs compared to almost 100% usage at 5 AUs. But at 5 AUs, the job took about 10 times longer.

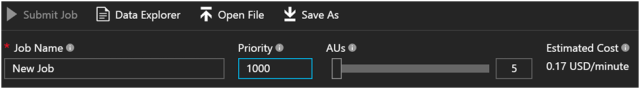
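To put the two runs side by side, here is a small sketch that computes the speedup and a textbook-style parallel efficiency from the figures in this post (1.2 hours, or 72 minutes, at 5 AUs and 6.2 minutes at 112 AUs). The "efficiency" ratio is my own illustration, not a metric reported by Azure Data Lake Analytics, and the function names are hypothetical.

```python
# Speedup and parallel efficiency from the two runs described in this post.
# "Efficiency" is the textbook ratio speedup / (increase in AUs); it is a
# rough illustration, not something the job diagnostics report.


def speedup(slow_minutes: float, fast_minutes: float) -> float:
    """How many times faster the second run finished."""
    return slow_minutes / fast_minutes


def efficiency(slow_aus: int, slow_minutes: float,
               fast_aus: int, fast_minutes: float) -> float:
    """Speedup divided by the factor by which the AU count grew."""
    return speedup(slow_minutes, fast_minutes) / (fast_aus / slow_aus)


s = speedup(72, 6.2)             # 5 AUs vs 112 AUs
e = efficiency(5, 72, 112, 6.2)  # 22.4x more AUs
print(f"speedup: {s:.1f}x, efficiency: {e:.0%}")
```

With these inputs, the job ran about 11.6 times faster on 22.4 times as many AUs, which works out to roughly 52% efficiency by this measure.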

To do the price comparison, I couldn’t find the exact cost of each job execution, but I can estimate it from the pricing shown when you open a U-SQL editor in the Azure Portal.

At 5 AUs, the cost is $0.17 USD/minute, so 72 minutes of execution costs $12.24.

At 112 AUs, the cost is $3.73 USD/minute, so 6.2 minutes of execution costs about $23.13.
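The arithmetic behind these figures can be sketched as follows. The prices are the per-minute figures quoted in this post, not an official price list, and the function name is hypothetical.

```python
# Cost sketch: per-minute price times minutes of execution.
# Prices are the per-minute figures quoted in this post (an assumption for
# any other account or date), not an official price list.


def job_cost(price_per_minute: float, minutes: float) -> float:
    """Total cost in USD for a job billed per minute."""
    return price_per_minute * minutes


runs = {
    5: {"price_per_minute": 0.17, "minutes": 72},
    112: {"price_per_minute": 3.73, "minutes": 6.2},
}

for aus, run in runs.items():
    cost = job_cost(run["price_per_minute"], run["minutes"])
    per_au_minute = run["price_per_minute"] / aus
    print(f"{aus} AUs: ${cost:.2f} total, ${per_au_minute:.4f} per AU-minute")
```

The per-AU-minute price comes out nearly the same for both runs (about $0.033 to $0.034), so the total cost mostly tracks the number of AU-minutes the job is allocated.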

| AUs | Execution time | Cost / min (USD) | Est. total cost (USD) |
| --- | --- | --- | --- |
| 5 | 67 mins | $0.17 | $11.39 |
| 112 | 3.3 mins | $3.73 | $12.30 |

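So based on the table, which uses the 67 minutes from the AU Usage tab at 5 AUs and the modeled 3.3 minutes at 112 AUs, going for max AUs seems like a good deal: it costs about a dollar more yet saves over an hour. Using the measured 6.2 minutes instead, the 112 AU run costs about $11 more (about $23 versus $12.24) and still saves over an hour. I would assume the cost difference also varies with other factors such as data size, number of records, data structure, and the amount of number crunching.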
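One way to weigh the trade-off is the extra cost per minute saved. The sketch below computes it twice: once from the table figures, and once from the measured durations (72 and 6.2 minutes) at the same per-minute prices. The function name and the tuple layout are my own choices for illustration.

```python
# Extra cost per minute saved when moving from 5 AUs to 112 AUs.
# Each run is a (minutes, total_cost_usd) tuple; figures come from this post.


def extra_cost_per_minute_saved(slow: tuple, fast: tuple) -> float:
    """Additional USD paid for each minute of execution time saved."""
    minutes_saved = slow[0] - fast[0]
    extra_cost = fast[1] - slow[1]
    return extra_cost / minutes_saved


# Table figures (3.3 minutes at 112 AUs is the modeled time).
table = extra_cost_per_minute_saved((67, 11.39), (3.3, 12.30))

# Measured durations, priced at the quoted per-minute rates.
measured = extra_cost_per_minute_saved((72, 0.17 * 72), (6.2, 3.73 * 6.2))

print(f"table: ${table:.3f} per minute saved")
print(f"measured: ${measured:.3f} per minute saved")
```

By this measure, the table figures work out to a little over one cent per minute saved, and the measured figures to about 17 cents per minute saved.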

If you have any feedback on my analysis, feel free to drop me a comment below. I hope this performance and cost analysis at least gives a ballpark idea of what to expect.