The Kubernetes AI Toolchain Operator (KAITO) for AKS has a RAG engine feature that lets you "chat" with and ask questions about your private documents, in conjunction with a KAITO-hosted language model such as Phi-4, to help ground the gen AI responses.

In previous blog posts and videos, I walked through a comprehensive setup of KAITO installation and Phi-4 model deployment. In this post I will go through what RAG is, and the next blog post will walk through installation and a demonstration.

Retrieval-Augmented Generation (RAG) is one of the most reliable ways to ground LLM responses in your private data, such as corporate policy documentation, code, runbooks, and PDFs. The challenge is usually the plumbing: chunking, embeddings, vector indexing, retrieval, prompt assembly, and wiring the whole thing to a large language model endpoint.
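To make the chunking part of that plumbing concrete, here is a minimal sketch of fixed-size chunking with overlap. It is purely illustrative: the function name `chunk_text`, the word-based splitting, and the default sizes are my own assumptions, not how RagEngine chunks documents internally.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into chunks of up to `chunk_size` words.

    Consecutive chunks share `overlap` words so that a sentence cut at a
    chunk boundary still appears whole in at least one chunk.
    (Hypothetical helper for illustration only.)
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the window has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


if __name__ == "__main__":
    doc = "Employees may work remotely up to three days per week with manager approval."
    for i, chunk in enumerate(chunk_text(doc, chunk_size=5, overlap=2)):
        print(i, chunk)
```

Overlap trades a little extra storage for better recall at chunk boundaries; real pipelines often split on tokens or sentences rather than whitespace-separated words.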

For the authoritative docs, see: https://kaito-project.github.io/kaito/docs/rag

Assuming you have KAITO installed in AKS, RagEngine provides:

- A Kubernetes CRD (`kind: RAGEngine`) that deploys a ready-to-use RAG service.

- Document indexing APIs (create/list indexes, add/list/update documents).

- Retrieval + generation APIs that call your LLM endpoint with retrieved context.

- Optional persistence for vector indexes via PVC (depending on chart/CRD version).

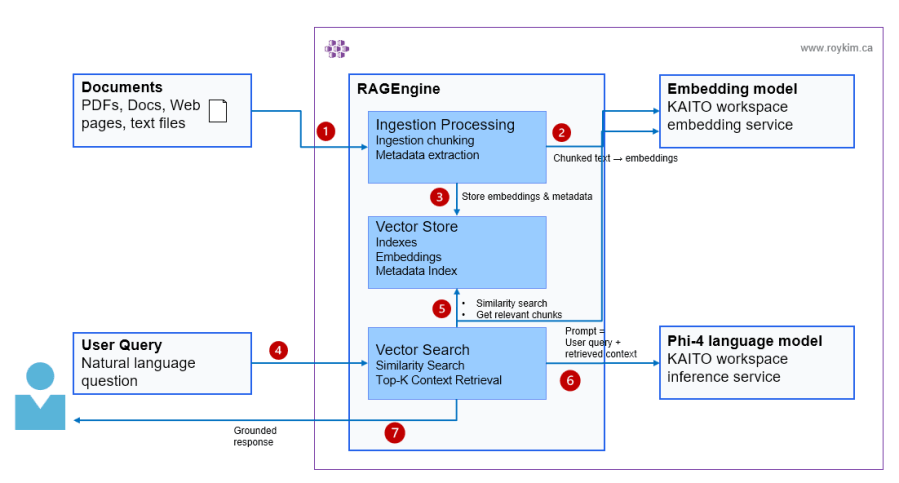

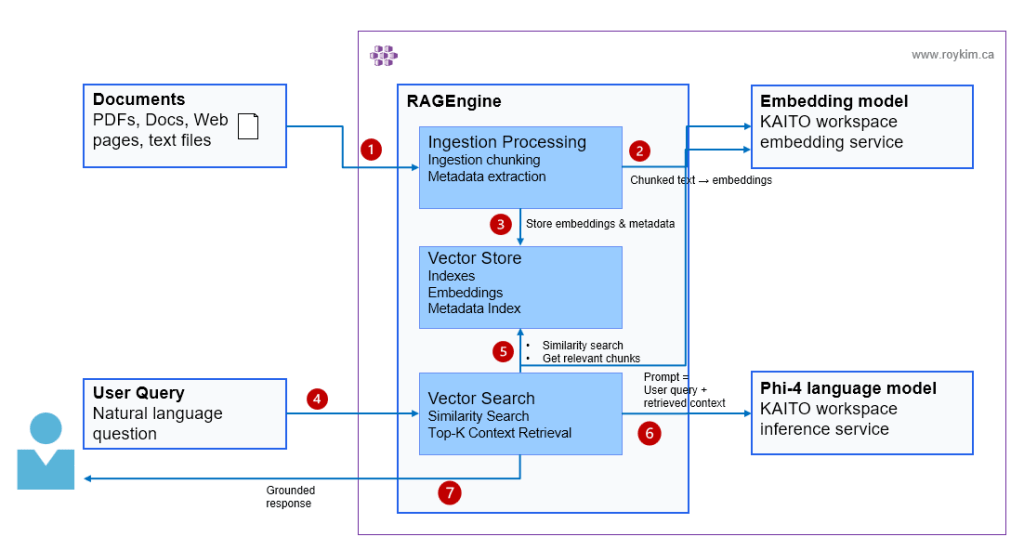

This is my own diagram, drawn to help me understand the high-level architecture.

- Ingestion / Indexing: You send documents to RagEngine.

- Embedding: RagEngine turns text into vectors using an embedding model, which can be hosted on AKS.

- Vector store: RagEngine stores vectors and metadata in its vector store index.

- User: presents a prompt or question about the indexed documents to the RagEngine via API.

- Query: RagEngine converts the query to an embedding and retrieves the top-matching chunks through a vector similarity search against the index.

- Generation: RagEngine calls your LLM endpoint (e.g., Phi-4 `/v1/chat/completions`) with the retrieved context.

- Response: RagEngine responds to the user with a grounded answer based on the retrieved context.
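To tie the flow above together, here is a minimal, self-contained sketch of indexing, vector search, and prompt assembly. It is not RagEngine's implementation: term-frequency counts stand in for a real embedding model, an in-memory list stands in for the vector store, and the names `embed`, `ToyVectorStore`, `build_chat_request` and the model name `phi-4` are hypothetical. The request body follows the OpenAI-style chat completions shape (`model`, `messages`) but is only built here, not sent.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase term-frequency counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyVectorStore:
    """Hypothetical in-memory index of (chunk text, vector) pairs."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, Counter]] = []

    def add(self, chunk: str) -> None:
        # Ingestion/Indexing + Embedding: store the chunk alongside its vector.
        self.entries.append((chunk, embed(chunk)))

    def search(self, query: str, top_k: int = 2) -> list[str]:
        # Query: embed the question, then rank chunks by cosine similarity.
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:top_k]]


def build_chat_request(question: str, context: list[str], model: str = "phi-4") -> dict:
    # Generation: an OpenAI-style chat completions body carrying the retrieved
    # context in the system message. "phi-4" is a placeholder model name.
    context_block = "\n\n".join(context)
    return {
        "model": model,
        "messages": [
            {"role": "system",
             "content": "Answer using only the context below.\n\n" + context_block},
            {"role": "user", "content": question},
        ],
    }


store = ToyVectorStore()
for doc in [
    "Employees may work remotely up to three days per week.",
    "The on-call runbook says to restart the ingress controller first.",
    "Expense reports are due by the fifth business day of each month.",
]:
    store.add(doc)

question = "How many days can I work remotely?"
hits = store.search(question, top_k=1)
request = build_chat_request(question, hits)
print(hits)
```

In an actual deployment, the embedding model and vector store replace these toy pieces, and a body like `request` would be sent to the model's chat completions endpoint, whose reply becomes the grounded answer.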

KAITO RagEngine packages that plumbing into a Kubernetes-native experience. You define a RAGEngine custom resource (an instance of the RAGEngine CRD), point it at an existing inference endpoint (e.g., a Phi-4 model), choose an embedding strategy (local or remote), and you get a service that can index documents and answer questions against those documents.

Final Remarks

KAITO and RAGEngine give you full control of your AI technology stack, which helps you align with the security and regulatory requirements in your organization. You also gain more control to optimize for cost at large scale. However, KAITO and RAGEngine are still under active development, and given the rapidly evolving gen AI landscape, they need to be regularly updated and managed. I would consider this one AI platform option alongside other third-party AI providers.