The Kubernetes AI Toolchain Operator (KAITO) setup on AKS provisions GPU node pools. This blog is a explores the VM instance types that uses the NVIDIA GPUs that are allocated to provisioned AKS node pools. These AKS nodepools host Kaito supported language models to expose an inference API service.

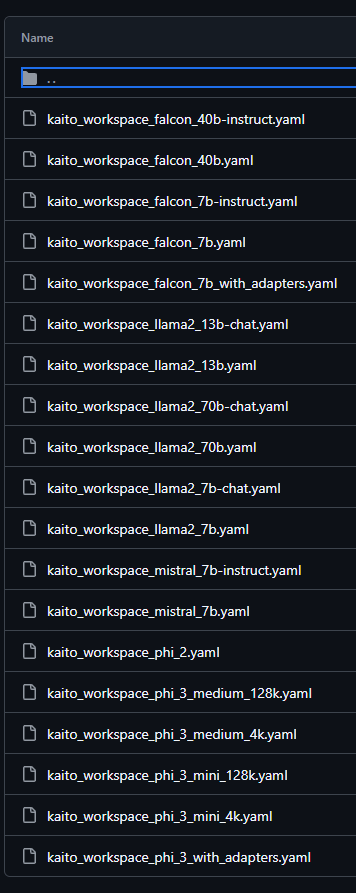

The KAITO project repo provides examples of AI inference models that are deployed as a Kaito workspace. You can find the full list https://github.com/kaito-project/kaito/tree/main/examples/inference

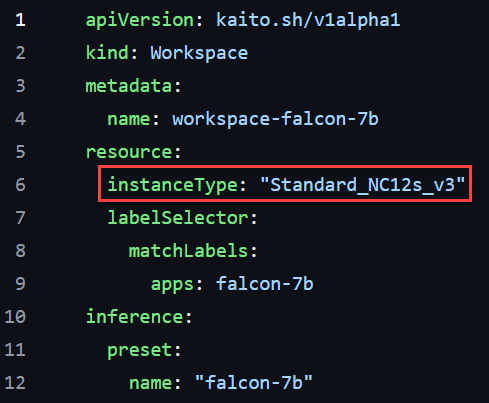

Here is one example of the Falcon 7b model that references a VM instance type that has an NVIDIA GPU

For easy analysis, I made the following table of some of the inference model YAML files from the above list and their defined VM instance type. I just want to point out the three GPU VM instance types that are used across all the example yaml files.

| Model | VM Instance Type | vCPUs | Memory(GB) | Accelerator GPU | Cost USD/month |

| falcon-7b llama-2-13b-chat mistral-7b | Standard_NC12s_v3 | 12 Intel Xeon E5-2690 v4 (Broadwell) | 224 | 2 Nvidia Tesla V100 GPU (16GB) | $4,870 |

| falcon-40b llama-2-70b phi-3-medium | Standard_NC96ads_A100_v4 | 96 | 880 | 4 Nvidia PCIe A100 GPU (80GB) | $13,948 |

| phi-3-mini | Standard_NC6s_v3 | 6 | 112 | 1 Nvidia Tesla V100 GPU (16GB) | $2,435 |

The first two set of models are large language models that have billions of parameters. As you can these VM instance types come with Nvidia GPUs along with a large amount of CPU and memory. Even more, I checked against the Azure pricing calculator to find USD cost per month. A very staggering amount of cost. So when deploying and operating these models into their GPU node pools, you want to save on costs by deprovisioning the Kaito worspace and nodepool or shut down the node pool when the inference service is not in use.

Note that you can modify the inference yaml files to use any other GPU accelerated VM as you like by choosing from the NC sub-family GPU accelerated VM series

Here’s some more background info of those NVIDIA GPUs.

NC-series VMs are ideal for training complex machine learning models and running AI applications. The NVIDIA GPUs provide significant acceleration for computations typically involved in deep learning and other intensive training tasks.

NCv3-series VMs are powered by NVIDIA Tesla V100 GPUs. For Nvidia product page read https://www.nvidia.com/en-gb/data-center/tesla-v100/

The NC A100 v4 series is powered by NVIDIA A100 PCIe GPU and third generation AMD EPYC™ 7V13 (Milan) processors. The VMs feature up to 4 NVIDIA A100 PCIe GPUs with 80 GB memory each, up to 96 non-multithreaded AMD EPYC Milan processor cores and 880 GiB of system memory.

https://www.nvidia.com/en-us/data-center/a100/

I hope this gives you a clearer understanding of the GPU node pools and what they provide. So that you are more knowledgeable as you deploy these inference models with the appropriate hardware compute specifications.

See my other blog post giving a step by step deployment of Kaito at Effortlessly Setup Kaito v0.3.1 on Azure Kubernetes Service To Deploy A Large Language Model.